QuakeC VM

Wednesday, 15th August 2007

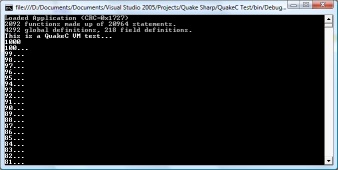

I've started serious work on the QuakeC virtual machine.

The bytecode is stored in a single file, progs.dat. It is made up of a number of different sections:

- Definitions data - an unformatted block of data containing a mixture of floating point values, integers and vectors.

- Statements - individual instructions, each made up of four short integers. Each statement has an operation code and up to three arguments. These arguments are typically pointers into the definitions data block.

- Functions - these provide a function name, a source file name, storage requirements for local variables and the address of the first statement.

On top of that there are two tables that break the definitions data down into global and field variables (as far as I'm aware these are only used to print "nice" names for variables when debugging, as they just attach a type and name to each definition), plus a string table.

The first few values in the definition data table are used for predefined values, such as function parameters and return value storage.
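As a rough illustration of the statement layout described above, here is a minimal Python sketch that splits a raw statements section into tuples. The entry only says "four short integers"; the little-endian byte order, the unsigned opcode and the signed arguments are assumptions on my part, and `decode_statements` is a made-up helper name.

```python
import struct

# Assumed layout (not stated in the entry): an unsigned 16-bit opcode
# followed by three signed 16-bit arguments, little-endian, 8 bytes in total.
STATEMENT = struct.Struct("<Hhhh")


def decode_statements(raw):
    """Split a raw statements section into (opcode, a, b, c) tuples."""
    usable = len(raw) - len(raw) % STATEMENT.size  # ignore a trailing partial record
    return [STATEMENT.unpack_from(raw, offset)
            for offset in range(0, usable, STATEMENT.size)]


# Two made-up statements, purely for illustration.
sample = STATEMENT.pack(1, 28, 29, 30) + STATEMENT.pack(0, 0, 0, 0)
statements = decode_statements(sample)
```

Each argument would then be treated as an index into the definitions data rather than as a value in its own right.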

Now, a slight problem is how to handle these variables. My initial solution was to read and write types strictly as particular types using the definitions table, but this idea got scrapped when I realised that the QuakeC bytecode uses the vector store opcode to copy string pointers, and a vector isn't much use when you need to print a string.

I now use a special VariablePointer class that internally stores the pointer inside the definition data block, and provides properties for reading and writing using the different formats.

    /// <summary>Defines a variable.</summary>
    public class VariablePointer {

        private readonly uint Offset;
        private readonly QuakeC Source;

        private void SetStreamPos() {
            this.Source.DefinitionsDataReader.BaseStream.Seek(this.Offset, SeekOrigin.Begin);
        }

        public VariablePointer(QuakeC source, uint offset) {
            this.Source = source;
            this.Offset = offset;
        }

        #region Read/Write Properties

        /// <summary>Gets or sets a floating-point value.</summary>
        public float Float {
            get { this.SetStreamPos(); return this.Source.DefinitionsDataReader.ReadSingle(); }
            set { this.SetStreamPos(); this.Source.DefinitionsDataWriter.Write(value); }
        }

        /// <summary>Gets or sets an integer value.</summary>
        public int Integer {
            get { this.SetStreamPos(); return this.Source.DefinitionsDataReader.ReadInt32(); }
            set { this.SetStreamPos(); this.Source.DefinitionsDataWriter.Write(value); }
        }

        /// <summary>Gets or sets a vector value.</summary>
        public Vector3 Vector {
            get { this.SetStreamPos(); return new Vector3(this.Source.DefinitionsDataReader.BaseStream); }
            set {
                this.SetStreamPos();
                this.Source.DefinitionsDataWriter.Write(value.X);
                this.Source.DefinitionsDataWriter.Write(value.Y);
                this.Source.DefinitionsDataWriter.Write(value.Z);
            }
        }

        #endregion

        #region Extended Properties

        public bool Boolean {
            get { return this.Float != 0f; }
            set { this.Float = value ? 1f : 0f; }
        }

        #endregion

        #region Read-Only Properties

        /// <summary>Gets a string value.</summary>
        public string String {
            get { return this.Source.GetString((uint)this.Integer); }
        }

        public Function Function {
            get { return this.Source.Functions[this.Integer]; }
        }

        #endregion
    }
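The idea behind the class can be shown in a few lines of Python: the same bytes are simply reinterpreted depending on which property you ask for. This is only a sketch, not a port of the class above; `VariableView`, `copy_vector_to` and the little-endian 4-byte slots are my own assumptions for illustration.

```python
import struct


class VariableView:
    """One slot in a shared data block, readable and writable in several formats."""

    def __init__(self, data, offset):
        self.data = data      # shared bytearray standing in for the definitions data
        self.offset = offset  # byte offset of this variable within the block

    @property
    def as_float(self):
        return struct.unpack_from("<f", self.data, self.offset)[0]

    @as_float.setter
    def as_float(self, value):
        struct.pack_into("<f", self.data, self.offset, value)

    @property
    def as_int(self):
        return struct.unpack_from("<i", self.data, self.offset)[0]

    @as_int.setter
    def as_int(self, value):
        struct.pack_into("<i", self.data, self.offset, value)

    def copy_vector_to(self, other):
        """Copy three 4-byte components verbatim, whatever they really hold."""
        other.data[other.offset:other.offset + 12] = self.data[self.offset:self.offset + 12]


block = bytearray(32)
source, target = VariableView(block, 0), VariableView(block, 16)
source.as_int = 1234           # pretend this is a string pointer
source.copy_vector_to(target)  # a "vector store" just moves the raw bytes
```

Because nothing is typed until it is read, `target.as_int` still yields the pointer value even though it arrived through a vector copy, which is the situation that broke the strictly typed approach.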

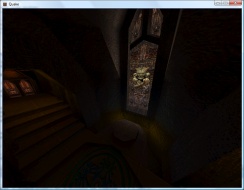

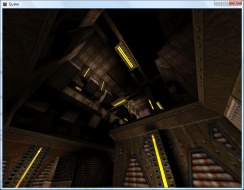

If the address of a function's first statement is negative, that means that the function being called is an internally-implemented one. The source code for the test application in the screenshot at the top of this entry is as follows:

    float testVal;

    void() test = {
        dprint("This is a QuakeC VM test...\n");
        testVal = 100;
        dprint(ftos(testVal * 10));
        dprint("\n");
        while (testVal > 0) {
            dprint(ftos(testVal));
            testVal = testVal - 1;
            dprint("...\n");
        }
        dprint("Lift off!");
    };
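Calls such as `dprint` and `ftos` in the test program are the internally-implemented kind. A minimal sketch of how a negative first-statement address might be dispatched follows; the `BUILTINS` table, its numbering, and the idea that the negated address is the table index are assumptions for illustration rather than something this entry spells out.

```python
output = []

# Hypothetical built-in table: the numbers here are illustrative only.
BUILTINS = {
    1: lambda text: output.append(text),  # stand-in for dprint
    2: lambda value: str(value),          # stand-in for ftos
}


def call_function(first_statement, args, run_bytecode):
    """Run a built-in if the address is negative, otherwise interpret bytecode."""
    if first_statement < 0:
        # Assumption: the negated address selects the built-in.
        return BUILTINS[-first_statement](*args)
    return run_bytecode(first_statement, args)


call_function(-1, ["Lift off!"], run_bytecode=None)
```

Ordinary functions fall through to `run_bytecode`, which stands in for the interpreter loop proper.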

There's a huge amount of work to be done here, especially when it comes to entities (not something I've looked at at all). All I can say is that I'm very thankful that the .qc source code is available and the DOS compiler runs happily under Windows - they're going to be handy for testing.

Vista and MIDI

Tuesday, 14th August 2007

I have a Creative Audigy SE sound card, which provides hardware MIDI synthesis. However, under Vista, there was no way (that I could see) to change the default MIDI output device to this card, meaning that all apps were using the software synthesiser instead.

Vista MIDI Fix is a 10-minute application I wrote to let me easily change the default MIDI output device. Applications which use MIDI device 0 still end up with the software synthesiser, unfortunately.

To get the hardware MIDI output device available I needed to install Creative's old XP drivers, and not the new Vista ones from their site. This results in missing CMSS, but other features - such as bass redirection, bass boost, 24-bit/96kHz output and the graphical equaliser - now work.

The Creative mixer either crashes or only displays two volume sliders (master and CD audio), which means that (as far as I can tell) there's no easy way to enable MIDI Reverb and MIDI Chorus.

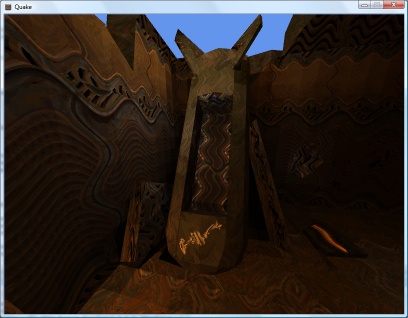

Quake 2 PVS, Realigned Lightmaps and Colour Lightmaps

Friday, 10th August 2007

Quake 2 stores its visibility lists differently to Quake 1. As nearby leaves in the BSP tree will usually share the same visibility information, the lists are grouped into clusters (Quake 1 stored a visibility list for every leaf). Rather than going directly from the camera's leaf to all of the other visible leaves, you need to use the leaf's cluster index to look up which other clusters are visible, then search through the leaves to find those that reference one of the visible clusters.
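The two-step lookup can be sketched in Python as follows. I'm assuming the visibility data has already been decompressed into one bit vector per cluster (bit `c` set means cluster `c` is visible), and that a cluster index of -1 means "no visibility information"; `visible_leaves` and both list layouts are hypothetical.

```python
def visible_leaves(camera_leaf, leaf_clusters, cluster_visibility):
    """Return the indices of leaves whose cluster can be seen from the camera's leaf.

    leaf_clusters[i] is the cluster index of leaf i (-1 if it has none).
    cluster_visibility[c] is a decompressed bit vector (bytes) for cluster c.
    """
    camera_cluster = leaf_clusters[camera_leaf]
    if camera_cluster < 0:
        # Nothing to go on, so treat every leaf as visible.
        return list(range(len(leaf_clusters)))
    bits = cluster_visibility[camera_cluster]
    return [leaf for leaf, cluster in enumerate(leaf_clusters)
            if cluster >= 0 and bits[cluster >> 3] & (1 << (cluster & 7))]


# Five leaves spread over three clusters; leaf 4 has no cluster.
leaf_clusters = [0, 0, 1, 2, -1]
cluster_visibility = [bytes([0b101]), bytes([0b011]), bytes([0b100])]
from_leaf_0 = visible_leaves(0, leaf_clusters, cluster_visibility)
```

Here cluster 0 can see clusters 0 and 2, so standing in leaf 0 yields leaves 0, 1 and 3.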

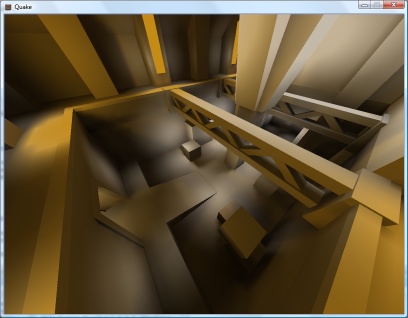

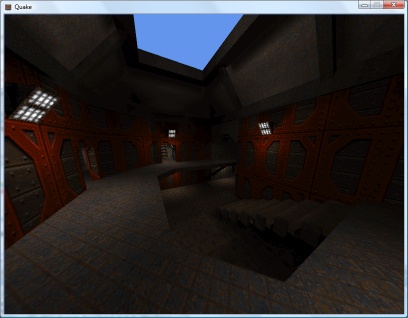

In a nutshell, I now use the visibility cluster information in the BSP to cull large quantities of hidden geometry, which has raised the framerate from 18FPS (base1.bsp) to about 90FPS.

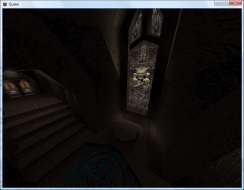

I had a look at the lightmap code again. Some of the lightmaps appeared to be off-centre (most clearly visible when there's a small light bracket on a wall casting a sharp inverted V shadow on the wall underneath it, as the tip of the V drifted to one side). On a whim, I decided that if the size of the lightmap was rounded to the nearest 16 diffuse texture pixels, one could assume that the top-left corner was not at (0,0) but offset by 8 pixels to centre the texture. This is probably utter nonsense, but plugging in the offset results in almost completely smooth lightmaps, like the screenshot above.

I quite like Quake 2's colour lightmaps, and I also quite like the chunky look of the software renderer. I've modified the pixel shader for the best of both worlds. I calculate the three components of the final colour individually, taking the brightness value for the colourmap from one of the three channels in the lightmap.

    float4 Result = 1;

    ColourMapIndex.y = 1 - tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).r;
    Result.r = tex2D(ColourMapSampler, ColourMapIndex).r;

    ColourMapIndex.y = 1 - tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).g;
    Result.g = tex2D(ColourMapSampler, ColourMapIndex).g;

    ColourMapIndex.y = 1 - tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).b;
    Result.b = tex2D(ColourMapSampler, ColourMapIndex).b;

    return Result;

Journals need more animated GIFs

Thursday, 9th August 2007

Pixel shaders are fun.

I've implemented support for decoding mip-maps from mip textures (embedded in the BSP) and from WAL files (external).

Now, I know that non-power-of-two textures are naughty. Quake uses a number of them, and when loading textures previously I've just let Direct3D do its thing, which has appeared to work well.

However, now that I'm directly populating the entire texture, mip-maps and all, I found that Texture2D.SetData was throwing exceptions when I was attempting to shoe-horn in a non-power-of-two texture. Strange. I hacked together a pair of extensions to the Picture class - GetResized(width, height) which returns a resized picture (nearest-neighbour, naturally) - and GetPowerOfTwo(), which returns a picture scaled up to the next power-of-two size if required.
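For what it's worth, the two helpers boil down to something like the Python sketch below. It is not the actual Picture code; the function names and the flat, row-major pixel list are assumptions made to keep the example self-contained.

```python
def next_power_of_two(n):
    """Smallest power of two that is greater than or equal to n."""
    power = 1
    while power < n:
        power *= 2
    return power


def resize_nearest(pixels, width, height, new_width, new_height):
    """Nearest-neighbour resize of a flat, row-major list of pixels."""
    return [pixels[(y * height // new_height) * width + (x * width // new_width)]
            for y in range(new_height)
            for x in range(new_width)]


def to_power_of_two(pixels, width, height):
    """Scale up to the next power-of-two size, or return the input unchanged."""
    new_width, new_height = next_power_of_two(width), next_power_of_two(height)
    if (new_width, new_height) == (width, height):
        return pixels, width, height
    return resize_nearest(pixels, width, height, new_width, new_height), new_width, new_height


# A 3x1 strip becomes 4x1, with the first pixel repeated.
scaled, scaled_width, scaled_height = to_power_of_two([1, 2, 3], 3, 1)
```

Nearest-neighbour sampling only ever copies existing pixels, so palette indices stored in the texture survive the resize intact.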

All textures now load correctly, and I can't help but notice that the strangely distorted textures - which I'd put down to crazy texture coordinates - now render correctly! It turns out that all of the distorted textures were non-power-of-two.

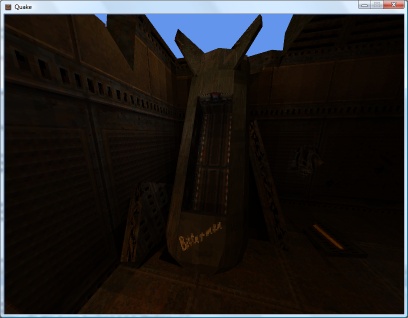

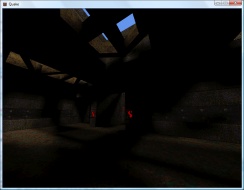

The screenshots above demonstrate that Quake 2 is also handled by the software-rendering simulation. The current effect file for the world is as follows:

    uniform extern float4x4 WorldViewProj : WORLDVIEWPROJECTION;
    uniform extern float Time;
    uniform extern bool Rippling;
    uniform extern texture DiffuseTexture;
    uniform extern texture LightMapTexture;
    uniform extern texture ColourMap;

    struct VS_OUTPUT {
        float4 Position : POSITION;
        float2 DiffuseTextureCoordinate : TEXCOORD0;
        float2 LightMapTextureCoordinate : TEXCOORD1;
        float3 SourcePosition: TEXCOORD2;
    };

    sampler DiffuseTextureSampler = sampler_state {
        texture = <DiffuseTexture>;
        mipfilter = POINT;
    };

    sampler LightMapTextureSampler = sampler_state {
        texture = <LightMapTexture>;
        mipfilter = LINEAR;
        minfilter = LINEAR;
        magfilter = LINEAR;
    };

    sampler ColourMapSampler = sampler_state {
        texture = <ColourMap>;
        addressu = CLAMP;
        addressv = CLAMP;
    };

    VS_OUTPUT Transform(float4 Position : POSITION0, float2 DiffuseTextureCoordinate : TEXCOORD0, float2 LightMapTextureCoordinate : TEXCOORD1) {

        VS_OUTPUT Out = (VS_OUTPUT)0;

        // Transform the input vertex position:
        Out.Position = mul(Position, WorldViewProj);

        // Copy the other values straight into the output for use in the pixel shader.
        Out.DiffuseTextureCoordinate = DiffuseTextureCoordinate;
        Out.LightMapTextureCoordinate = LightMapTextureCoordinate;
        Out.SourcePosition = Position;

        return Out;
    }

    float4 ApplyTexture(VS_OUTPUT vsout) : COLOR {

        // Start with the original diffuse texture coordinate:
        float2 DiffuseCoord = vsout.DiffuseTextureCoordinate;

        // If the surface is "rippling", wobble the texture coordinate.
        if (Rippling) {
            float2 RippleOffset = { sin(Time + vsout.SourcePosition.x / 32) / 8, cos(Time + vsout.SourcePosition.z / 32) / 8 };
            DiffuseCoord += RippleOffset;
        }

        // Calculate the colour map look-up coordinate from the diffuse and lightmap textures:
        float2 ColourMapIndex = {
            tex2D(DiffuseTextureSampler, DiffuseCoord).a,
            1 - (float)tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).rgba
        };

        // Look up and return the value from the colour map.
        return tex2D(ColourMapSampler, ColourMapIndex).rgba;
    }

    technique TransformAndTexture {
        pass P0 {
            vertexShader = compile vs_2_0 Transform();
            pixelShader = compile ps_2_0 ApplyTexture();
        }
    }

It would no doubt be faster to have two techniques; one for rippling surfaces and one for still surfaces. It is, however, easier to use the above and switch the rippling on and off when required (rather than group surfaces and switch techniques). Given that the framerate rises from ~135FPS to ~137FPS on my video card if I remove the ripple effect altogether, it doesn't seem worth it.

Sorting out the order in which polygons are drawn looks like it's going to get important, as I need to support alpha-blended surfaces for Quake 2, and there are some nasty areas of Z-fighting cropping up.

Alpha-blending in 8-bit? Software Quake didn't support any sort of alpha blending (hence the need to re-vis levels for use with Quake GL, as the areas underneath the opaque water were marked as invisible), and Quake 2 has a data file that maps 16-bit colour values to 8-bit palette indices. Quake 2 also had a "stipple alpha" mode that used a dither pattern to handle the two translucent surface opacities (1/3 and 2/3 ratios).
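To give a feel for the stipple idea, here is a tiny Python sketch of a dither test that draws one or two pixels out of every three. The diagonal `(x + y) % 3` pattern is purely my own illustrative choice and is not claimed to be the pattern Quake 2 actually uses; `stipple_covers` and `coverage` are made-up names.

```python
def stipple_covers(x, y, level):
    """True if pixel (x, y) should be drawn at an opacity of level/3 (level is 1 or 2)."""
    # Illustrative diagonal pattern: every third diagonal is drawn per level step.
    return (x + y) % 3 < level


def coverage(level, size=3):
    """Count how many pixels in a size x size tile the pattern draws."""
    return sum(stipple_covers(x, y, level)
               for y in range(size)
               for x in range(size))
```

On a 3×3 tile the first level draws three pixels and the second draws six, matching the 1/3 and 2/3 ratios without needing any blending in the 8-bit frame buffer.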

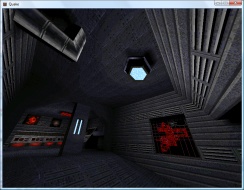

Shaders

Tuesday, 7th August 2007

Following sirob's prompting, I dropped the BasicEffect for rendering and rolled my own effect. After seeing the things that could be done with them (pixel and vertex shaders) I'd assumed they'd be hard to put together, and that I'd need to change my code significantly.

In reality all I've had to do is copy and paste the sample from the SDK documentation, load it into the engine (via the content pipeline), create a custom vertex declaration to handle two sets of texture coordinates (diffuse and lightmap) and strip out all of the duplicate code I had for creating and rendering from two vertex arrays.

    [StructLayout(LayoutKind.Sequential)]
    public struct VertexPositionTextureDiffuseLightMap {

        public Xna.Vector3 Position;
        public Xna.Vector2 DiffuseTextureCoordinate;
        public Xna.Vector2 LightMapTextureCoordinate;

        public VertexPositionTextureDiffuseLightMap(Xna.Vector3 position, Xna.Vector2 diffuse, Xna.Vector2 lightMap) {
            this.Position = position;
            this.DiffuseTextureCoordinate = diffuse;
            this.LightMapTextureCoordinate = lightMap;
        }

        public readonly static VertexElement[] VertexElements = new VertexElement[]{
            new VertexElement(0, 0, VertexElementFormat.Vector3, VertexElementMethod.Default, VertexElementUsage.Position, 0),
            new VertexElement(0, 12, VertexElementFormat.Vector2, VertexElementMethod.Default, VertexElementUsage.TextureCoordinate, 0),
            new VertexElement(0, 20, VertexElementFormat.Vector2, VertexElementMethod.Default, VertexElementUsage.TextureCoordinate, 1)
        };
    }
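The byte offsets in the vertex declaration (0, 12 and 20) fall straight out of the field sizes. As a quick sanity check, this Python sketch recomputes them, assuming each component is a tightly packed 32-bit float; `FIELDS` and `layout` are names invented for the example.

```python
import struct

# Field name and struct format for each vertex element, in declaration order.
FIELDS = [
    ("Position", "<3f"),
    ("DiffuseTextureCoordinate", "<2f"),
    ("LightMapTextureCoordinate", "<2f"),
]


def layout(fields):
    """Return ({field name: byte offset}, total stride) for tightly packed fields."""
    offsets, offset = {}, 0
    for name, fmt in fields:
        offsets[name] = offset
        offset += struct.calcsize(fmt)
    return offsets, offset


offsets, stride = layout(FIELDS)
```

The resulting stride of 28 bytes is the size of one vertex in the buffer.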

    uniform extern float4x4 WorldViewProj : WORLDVIEWPROJECTION;
    uniform extern texture DiffuseTexture;
    uniform extern texture LightMapTexture;
    uniform extern float Time;

    struct VS_OUTPUT {
        float4 Position : POSITION;
        float2 DiffuseTextureCoordinate : TEXCOORD0;
        float2 LightMapTextureCoordinate : TEXCOORD1;
    };

    sampler DiffuseTextureSampler = sampler_state {
        Texture = <DiffuseTexture>;
        mipfilter = LINEAR;
    };

    sampler LightMapTextureSampler = sampler_state {
        Texture = <LightMapTexture>;
        mipfilter = LINEAR;
    };

    VS_OUTPUT Transform(float4 Position : POSITION, float2 DiffuseTextureCoordinate : TEXCOORD0, float2 LightMapTextureCoordinate : TEXCOORD1) {
        VS_OUTPUT Out = (VS_OUTPUT)0;
        Out.Position = mul(Position, WorldViewProj);
        Out.DiffuseTextureCoordinate = DiffuseTextureCoordinate;
        Out.LightMapTextureCoordinate = LightMapTextureCoordinate;
        return Out;
    }

    float4 ApplyTexture(VS_OUTPUT vsout) : COLOR {
        float4 DiffuseColour = tex2D(DiffuseTextureSampler, vsout.DiffuseTextureCoordinate).rgba;
        float4 LightMapColour = tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).rgba;
        return DiffuseColour * LightMapColour;
    }

    technique TransformAndTexture {
        pass P0 {
            vertexShader = compile vs_2_0 Transform();
            pixelShader = compile ps_2_0 ApplyTexture();
        }
    }

Of course, now I have that up and running I might as well have a play with it...

By adding up the individual RGB components of the lightmap texture and dividing the sum by three you can simulate the monochromatic lightmaps used by Quake 2's software renderer. Sadly I know not of a technique to go the other way and provide colourful lightmaps for Quake 1.

Not very interesting, though.
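The averaging itself is a one-liner; in Python terms it amounts to the sketch below (in the shader it would be applied to the lightmap sample before multiplying by the diffuse colour). `to_monochrome` is just a name picked for the example.

```python
def to_monochrome(rgb):
    """Replace each channel of an (r, g, b) lightmap sample with the average of all three."""
    r, g, b = rgb
    grey = (r + g + b) / 3
    return (grey, grey, grey)


# An orange light turns into a mid grey.
sample = to_monochrome((1.0, 0.5, 0.0))
```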

I've always wanted to do something with pixel shaders as you get to play with tricks that are a given in software rendering with the speed of dedicated hardware acceleration. I get the feeling that the effect (or a variation of it, at least) will be handy for watery textures.

    float4 ApplyTexture(VS_OUTPUT vsout) : COLOR {
        float2 RippledTexture = vsout.DiffuseTextureCoordinate;
        RippledTexture.x += sin(vsout.DiffuseTextureCoordinate.y * 16 + Time) / 16;
        RippledTexture.y += sin(vsout.DiffuseTextureCoordinate.x * 16 + Time) / 16;
        float4 DiffuseColour = tex2D(DiffuseTextureSampler, RippledTexture).rgba;
        float4 LightMapColour = tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).rgba;
        return DiffuseColour * LightMapColour;
    }

My code is no doubt suboptimal (and downright stupid).

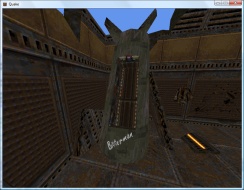

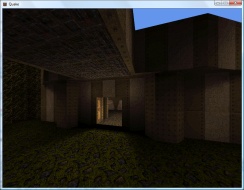

Naturally, I needed to try and duplicate Scet's software rendering simulation trick.

The colour map (gfx/colormap.lmp) is a 256×64 array of bytes. Each byte is an index to a colour palette entry; the X axis is the colour and the Y axis is the brightness, i.e. RGBColour = Palette[ColourMap[DiffuseColour, Brightness]]. I cram the original diffuse colour palette index into the (unused) alpha channel of the ARGB texture, and leave the lightmaps untouched.

    float2 LookUp = 0;
    LookUp.x = tex2D(DiffuseTextureSampler, vsout.DiffuseTextureCoordinate).a;
    LookUp.y = (1 - tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).r) / 4;
    return tex2D(ColourMapTextureSampler, LookUp);
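Outside the shader, the same two-stage look-up is just a pair of array indexes. The Python sketch below uses a synthetic palette and colour map rather than the real gfx/colormap.lmp data, and it assumes the table is stored row by row (256 colours per brightness row) with row 0 as the brightest, which is consistent with the `1 -` in the shader; `shade` is a hypothetical helper.

```python
COLOURS = 256  # X axis: palette index of the diffuse texel
LEVELS = 64    # Y axis: brightness row


def shade(palette, colour_map, colour_index, brightness_row):
    """Return Palette[ColourMap[DiffuseColour, Brightness]] for one texel."""
    return palette[colour_map[brightness_row * COLOURS + colour_index]]


# Synthetic data: a greyscale palette, an identity row at full brightness
# and a final row that maps every colour to palette entry 0 (black).
palette = [(i, i, i) for i in range(256)]
colour_map = bytearray(COLOURS * LEVELS)
colour_map[0:COLOURS] = bytes(range(256))

lit = shade(palette, colour_map, 200, 0)
dark = shade(palette, colour_map, 200, LEVELS - 1)
```

Because the first index must be a whole palette entry, any filtering that blends neighbouring texels in the alpha channel would land on the wrong column.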

As I'm not loading the mip-maps (and am letting Direct3D handle generation of mip-maps for me) I have to disable mip-mapping for the above to work, as otherwise you'd end up with non-integral palette indices. The results are therefore a bit noisier in the distance than in vanilla Quake, but I like the 8-bit palette look. At least the fullbright colours work.