Journals need more animated GIFs

Thursday, 9th August 2007

Pixel shaders are fun.

I've implemented support for decoding mip-maps from mip textures (embedded in the BSP) and from WAL files (external).
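For anyone curious what decoding those mip-maps involves, here's a rough sketch, in Python for brevity rather than the project's own code. It assumes the Quake 1 mip texture layout as I understand it: a 16-byte name, a width and height, then four offsets measured from the start of the header, with each mip level half the size of the previous one. The `read_mip_levels` name is made up for illustration.

```python
import struct

# Assumed Quake 1 mip texture header: 16-byte name, width, height and
# four mip offsets (little-endian unsigned 32-bit), 40 bytes in total.
MIPTEX_HEADER = struct.Struct("<16sII4I")

def read_mip_levels(data, base=0):
    """Return (name, [(width, height, pixels), ...]) for the four mip levels."""
    name, width, height, *offsets = MIPTEX_HEADER.unpack_from(data, base)
    name = name.split(b"\0", 1)[0].decode("ascii")
    levels = []
    for level, offset in enumerate(offsets):
        # Each level halves both dimensions of the previous one.
        w, h = width >> level, height >> level
        # Offsets are taken relative to the start of the header.
        start = base + offset
        levels.append((w, h, data[start:start + w * h]))
    return name, levels
```

Each level's `pixels` is a run of 8-bit palette indices, which is what ends up in the texture's alpha channel for the colour map look-up.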

Now, I know that non-power-of-two textures are naughty. Quake uses a number of them, and when loading textures previously I just let Direct3D do its thing, which appeared to work well.

However, now that I'm directly populating the entire texture, mip-maps and all, I found that Texture2D.SetData was throwing exceptions when I was attempting to shoe-horn in a non-power-of-two texture. Strange. I hacked together a pair of extensions to the Picture class - GetResized(width, height) which returns a resized picture (nearest-neighbour, naturally) - and GetPowerOfTwo(), which returns a picture scaled up to the next power-of-two size if required.
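The two helpers boil down to something like the sketch below. It's written in Python rather than as the actual Picture class extensions, and it assumes a picture is just a flat, row-major list of pixels; the function names only mirror GetResized and GetPowerOfTwo, they aren't the real implementation.

```python
def next_power_of_two(n):
    """Smallest power of two that is >= n (for n >= 1)."""
    p = 1
    while p < n:
        p *= 2
    return p

def get_resized(pixels, width, height, new_width, new_height):
    """Nearest-neighbour resize of a row-major list of pixels."""
    out = []
    for y in range(new_height):
        src_y = y * height // new_height  # nearest source row
        for x in range(new_width):
            src_x = x * width // new_width  # nearest source column
            out.append(pixels[src_y * width + src_x])
    return out

def get_power_of_two(pixels, width, height):
    """Scale up to the next power-of-two size, only if required."""
    new_w, new_h = next_power_of_two(width), next_power_of_two(height)
    if (new_w, new_h) == (width, height):
        return pixels, width, height
    return get_resized(pixels, width, height, new_w, new_h), new_w, new_h
```

Nearest-neighbour matters here because the pixels are palette indices, so any filtering that averaged neighbouring values would produce meaningless colours.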

All textures now load correctly, and I can't help but notice that the strangely distorted textures - which I'd put down to crazy texture coordinates - now render correctly! It turns out that all of the distorted textures were non-power-of-two.

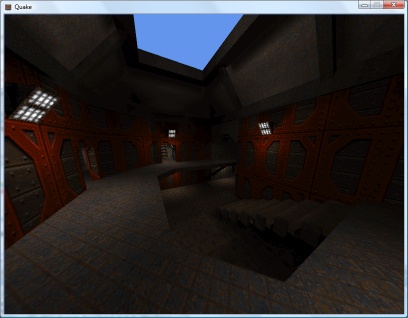

The screenshots above demonstrate that Quake 2 is also handled by the software-rendering simulation. The current effect file for the world is as follows:

    uniform extern float4x4 WorldViewProj : WORLDVIEWPROJECTION;
    uniform extern float Time;
    uniform extern bool Rippling;
    uniform extern texture DiffuseTexture;
    uniform extern texture LightMapTexture;
    uniform extern texture ColourMap;

    struct VS_OUTPUT
    {
        float4 Position : POSITION;
        float2 DiffuseTextureCoordinate : TEXCOORD0;
        float2 LightMapTextureCoordinate : TEXCOORD1;
        float3 SourcePosition : TEXCOORD2;
    };

    sampler DiffuseTextureSampler = sampler_state
    {
        texture = <DiffuseTexture>;
        mipfilter = POINT;
    };

    sampler LightMapTextureSampler = sampler_state
    {
        texture = <LightMapTexture>;
        mipfilter = LINEAR;
        minfilter = LINEAR;
        magfilter = LINEAR;
    };

    sampler ColourMapSampler = sampler_state
    {
        texture = <ColourMap>;
        addressu = CLAMP;
        addressv = CLAMP;
    };

    VS_OUTPUT Transform(float4 Position : POSITION0, float2 DiffuseTextureCoordinate : TEXCOORD0, float2 LightMapTextureCoordinate : TEXCOORD1)
    {
        VS_OUTPUT Out = (VS_OUTPUT)0;

        // Transform the input vertex position:
        Out.Position = mul(Position, WorldViewProj);

        // Copy the other values straight into the output for use in the pixel shader.
        Out.DiffuseTextureCoordinate = DiffuseTextureCoordinate;
        Out.LightMapTextureCoordinate = LightMapTextureCoordinate;
        Out.SourcePosition = Position;

        return Out;
    }

    float4 ApplyTexture(VS_OUTPUT vsout) : COLOR
    {
        // Start with the original diffuse texture coordinate:
        float2 DiffuseCoord = vsout.DiffuseTextureCoordinate;

        // If the surface is "rippling", wobble the texture coordinate.
        if (Rippling)
        {
            float2 RippleOffset = { sin(Time + vsout.SourcePosition.x / 32) / 8, cos(Time + vsout.SourcePosition.z / 32) / 8 };
            DiffuseCoord += RippleOffset;
        }

        // Calculate the colour map look-up coordinate from the diffuse and lightmap textures:
        float2 ColourMapIndex = {
            tex2D(DiffuseTextureSampler, DiffuseCoord).a,
            1 - (float)tex2D(LightMapTextureSampler, vsout.LightMapTextureCoordinate).rgba
        };

        // Look up and return the value from the colour map.
        return tex2D(ColourMapSampler, ColourMapIndex).rgba;
    }

    technique TransformAndTexture
    {
        pass P0
        {
            vertexShader = compile vs_2_0 Transform();
            pixelShader = compile ps_2_0 ApplyTexture();
        }
    }

It would no doubt be faster to have two techniques; one for rippling surfaces and one for still surfaces. It is, however, easier to use the above and switch the rippling on and off when required (rather than group surfaces and switch techniques). Given that the framerate rises from ~135FPS to ~137FPS on my video card if I remove the ripple effect altogether, it doesn't seem worth it.

Sorting out the order in which polygons are drawn looks like it's going to get important, as I need to support alpha-blended surfaces for Quake 2, and there are some nasty areas of Z-fighting cropping up.

Alpha-blending in 8-bit? Software Quake didn't support any sort of alpha blending (hence the need to re-vis levels for use with Quake GL, as the areas underneath the opaque water were marked as invisible), and Quake 2 has a data file that maps 16-bit colour values to 8-bit palette indices. Quake 2 also had a "stipple alpha" mode that used a dither pattern to handle the two translucent surface opacities (1/3 and 2/3 ratios).
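To get a feel for how a stipple approach could cover the 1/3 and 2/3 opacities without any real blending, here's a small Python sketch. The diagonal `(x + y) % 3` pattern is purely my own assumption for illustration; I haven't checked what pattern Quake 2 actually uses, and the function names are made up.

```python
def stipple_visible(x, y, thirds):
    """True if the translucent surface's pixel at (x, y) should be drawn.

    thirds is the surface opacity in thirds (1 or 2). The diagonal
    (x + y) % 3 pattern is an assumption, not Quake 2's actual pattern.
    """
    return (x + y) % 3 < thirds

def draw_stippled(background, surface, width, height, thirds):
    """Overlay surface on background, keeping only the stippled pixels."""
    out = list(background)
    for y in range(height):
        for x in range(width):
            if stipple_visible(x, y, thirds):
                out[y * width + x] = surface[y * width + x]
    return out
```

Because every output pixel is copied straight from either the surface or the background, the result stays within the 8-bit palette and no colour look-up is needed at all.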